Machine Learning Researcher

Building brains

Pushing the boundaries of AI at Siemens R&D. Expertise in developing and deploying enterprise-grade AI solutions—from multimodal foundation models and LLM agents to vision architectures—powered by distributed training pipelines and latency-sensitive inference engines.

Academic & Corporate Experience

Professional Experience

Corporate Experience

Machine Learning R&D

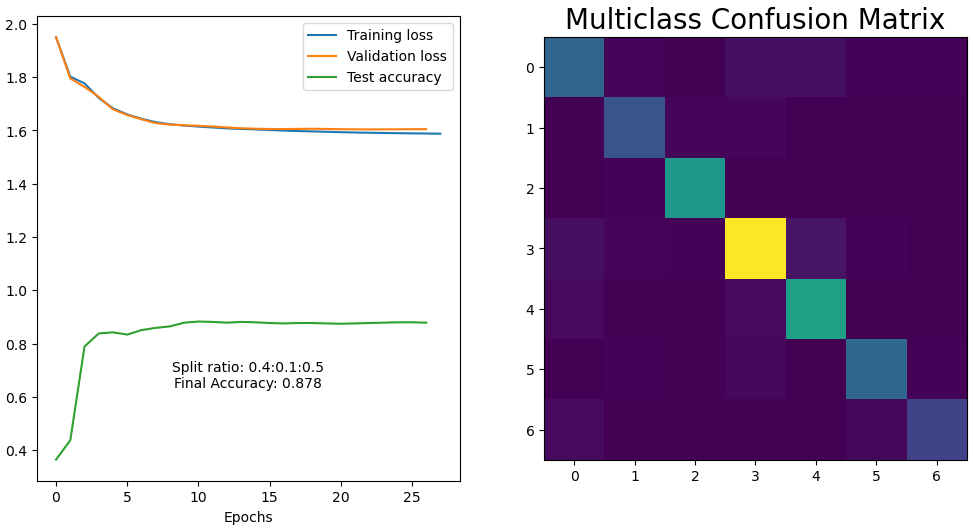

Siemens AG • Berlin

ML Researcher, AI R&D Division: Conduct research on deep learning architectures for computer-vision-based detection and semantic interpretation of electrical schematics and symbols. Lead comparative analysis of state-of-the-art object detection frameworks (YOLO variants, Co-DETR, InternImage-H, Faster R-CNN), segmentation models (SAM 2), vision transformers (Dual Attention ViT, DINOv2), and multimodal architectures (Qwen2.5-VL), trained on distributed compute clusters in Azure ML Studio, achieving >96% mean average precision across multiple model configurations and test datasets.

Machine Learning - TDI Division

Deutsche Bank • Berlin

Featured Work

Explore my latest work

A collection of my projects, research papers, and machine learning expertise.

Research Papers

Latest Paper Explanations

Deep dives into the most influential research papers in LLMs, computer vision and agentic models.

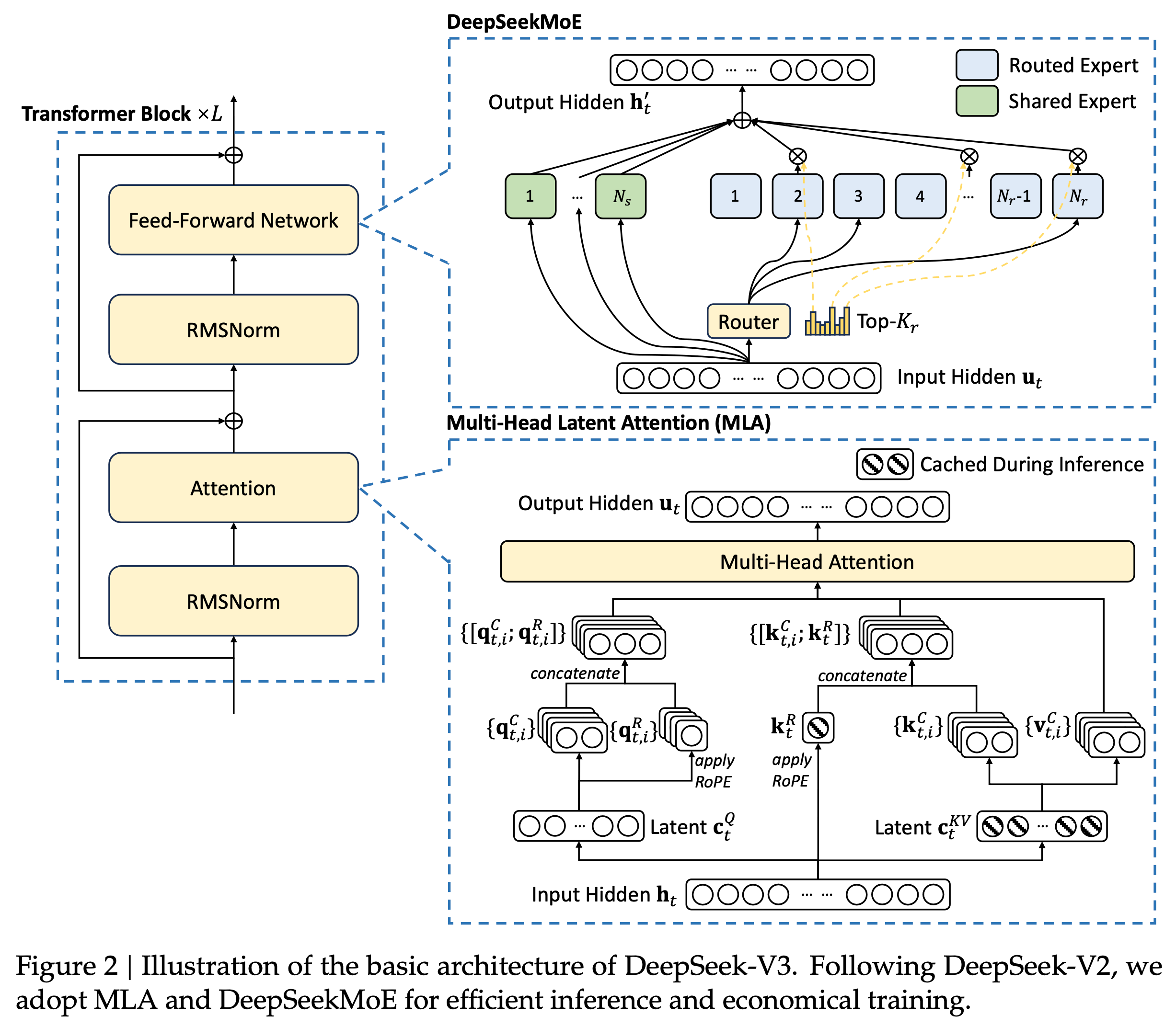

DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning

Various Authors

DeepSeek-R1 uses large-scale reinforcement learning to strengthen the reasoning capabilities of a language model...

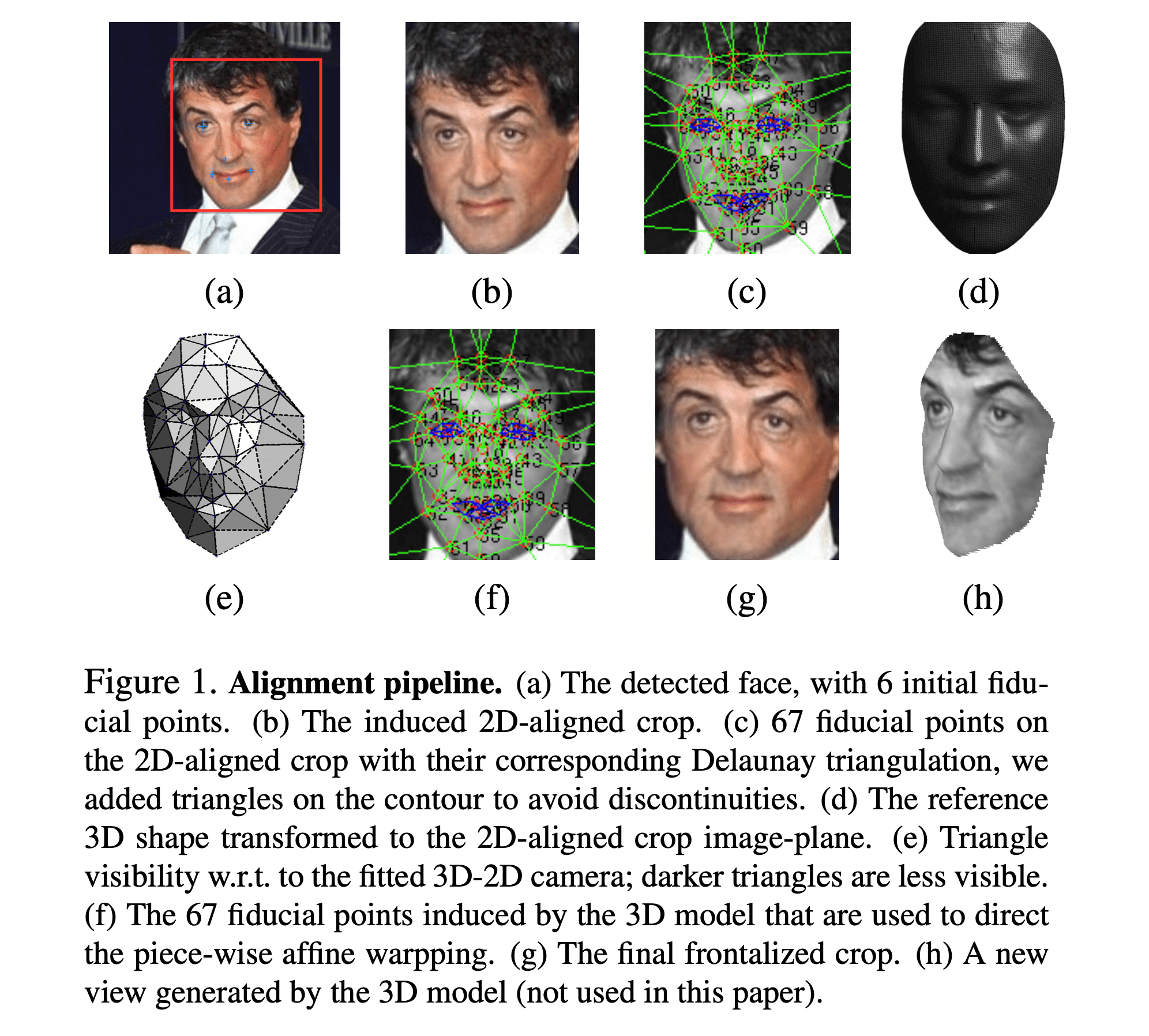

DeepFace: Closing the Gap to Human-Level Performance in Face Verification

Taigman, Yang, Ranzato, Wolf

DeepFace introduces a nine-layer deep neural network architecture that achieves an accuracy of 97.35% on the Labeled Faces in the Wild (LFW) dataset...

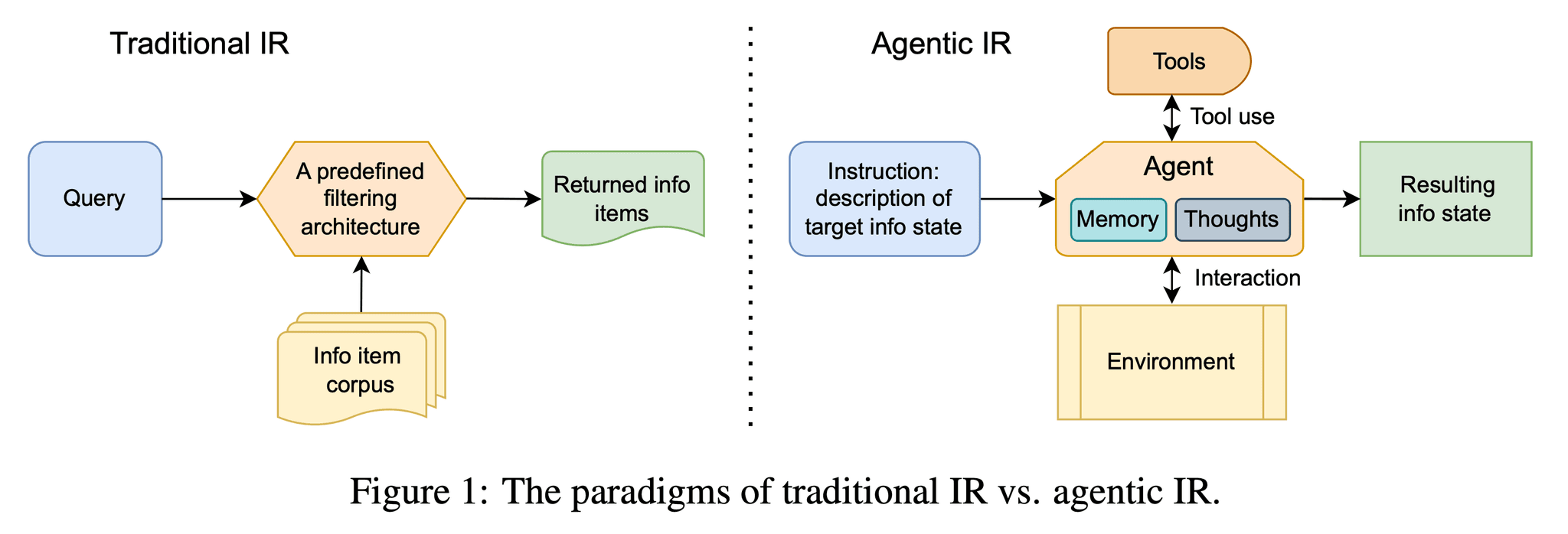

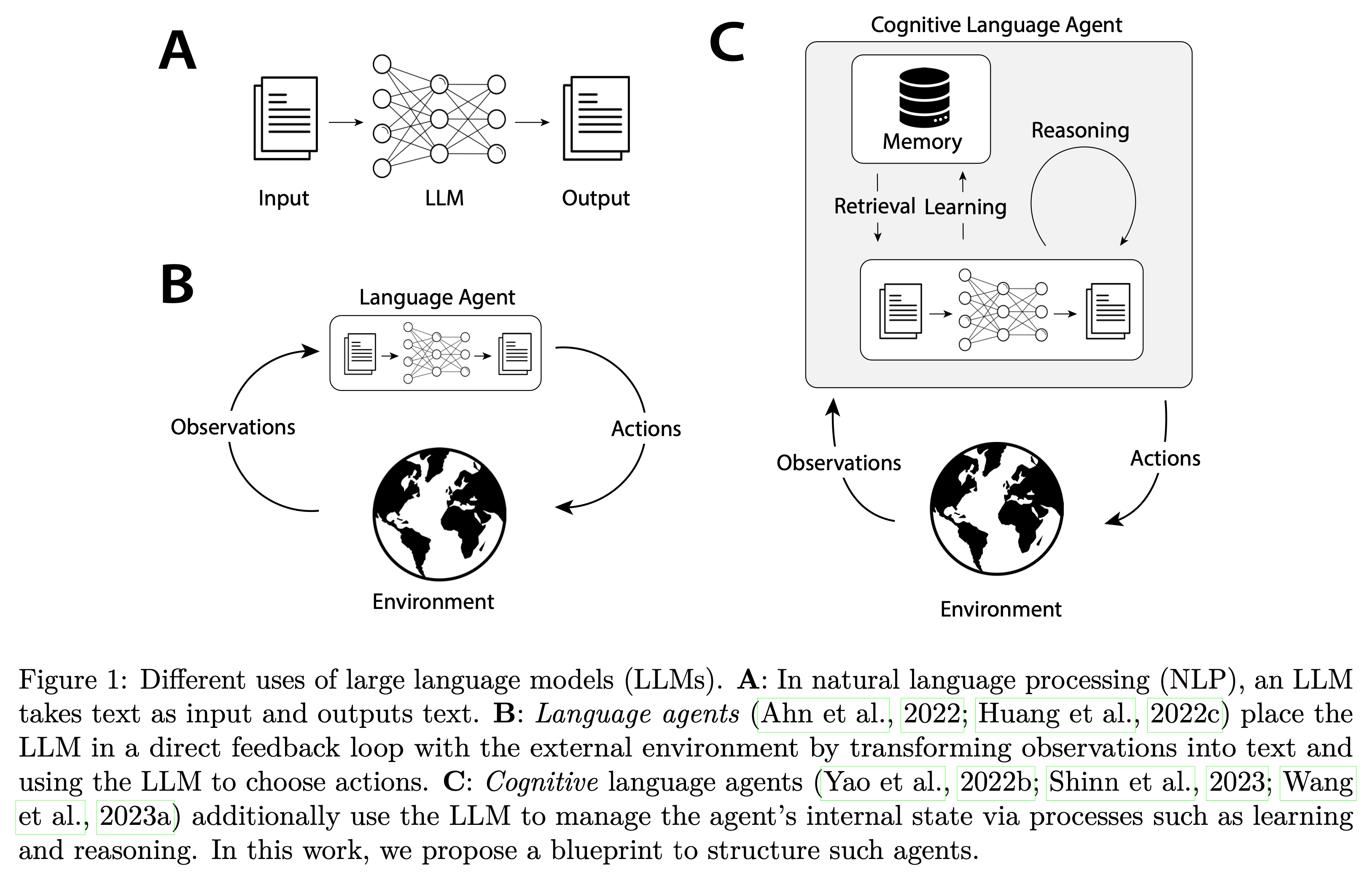

Cognitive Architectures for Language Agents

Sumers, Yao, Narasimhan, Griffiths

This paper presents a systematic approach to building language agents with cognitive architectures, exploring the intersection of language models and decision-making systems...

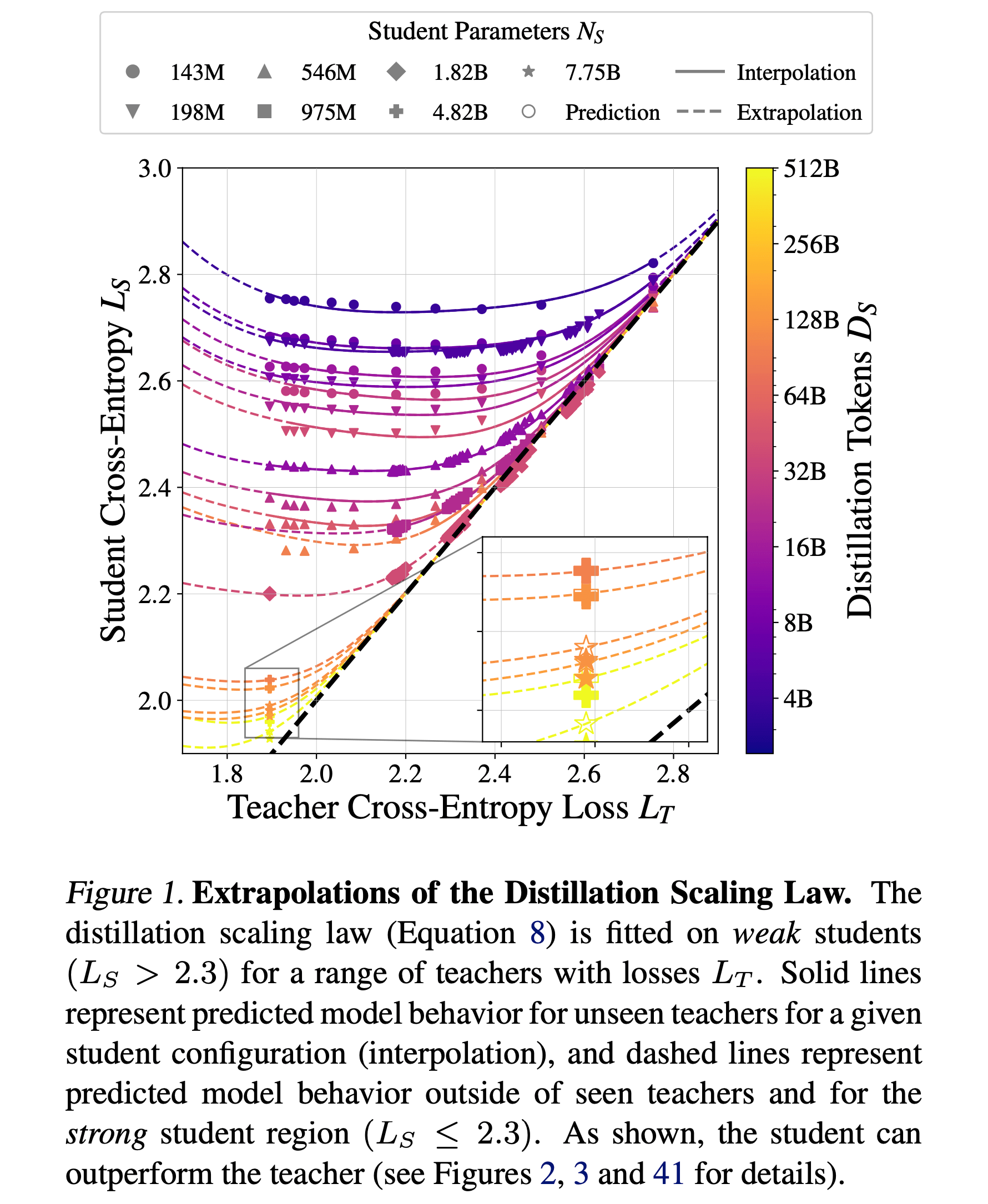

Distillation Scaling Laws

Busbridge, Shidani, Webb, Littwin

This study reveals fundamental patterns in how knowledge distillation effectiveness scales with model size, data quantity, and architectural choices...

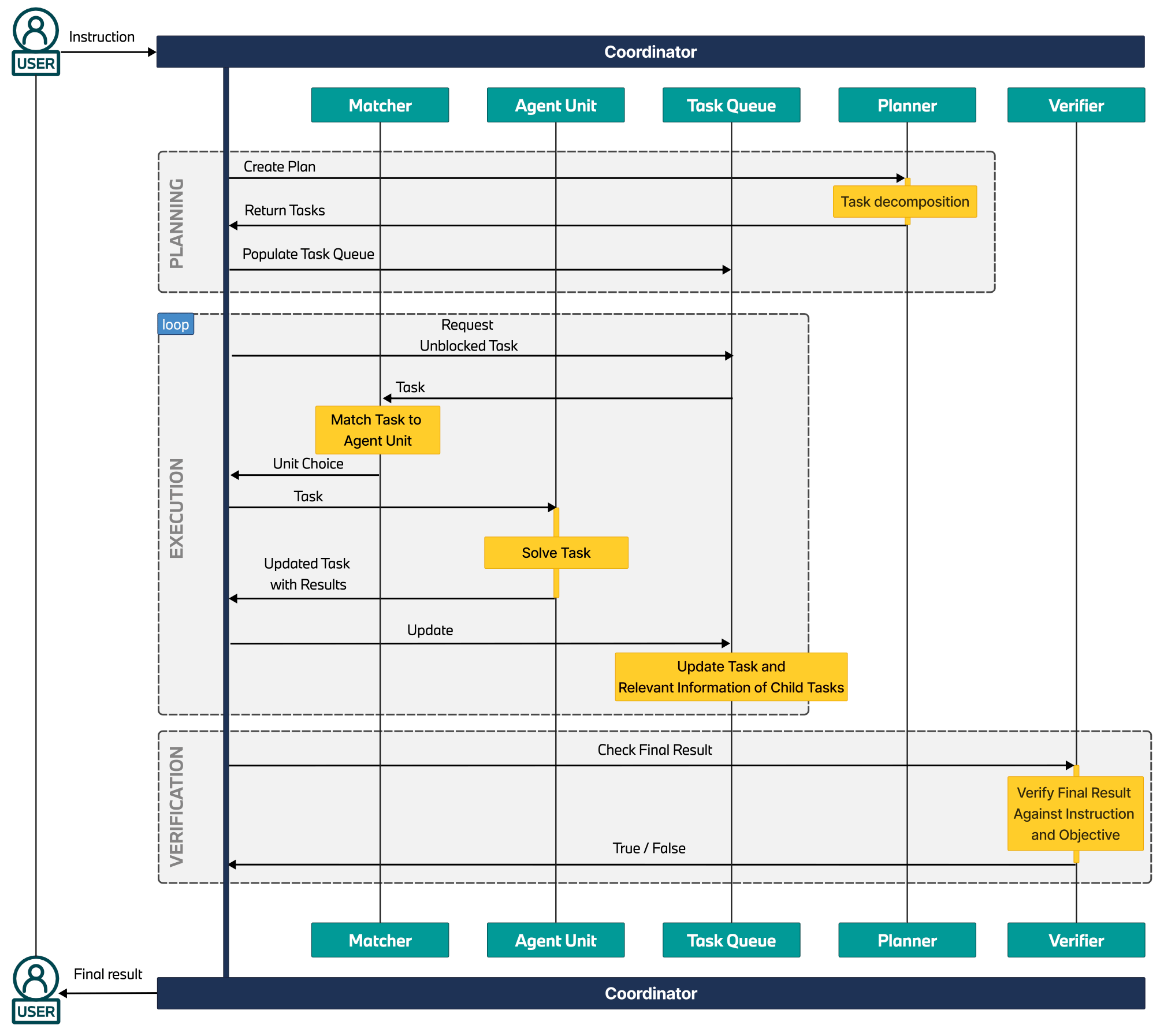

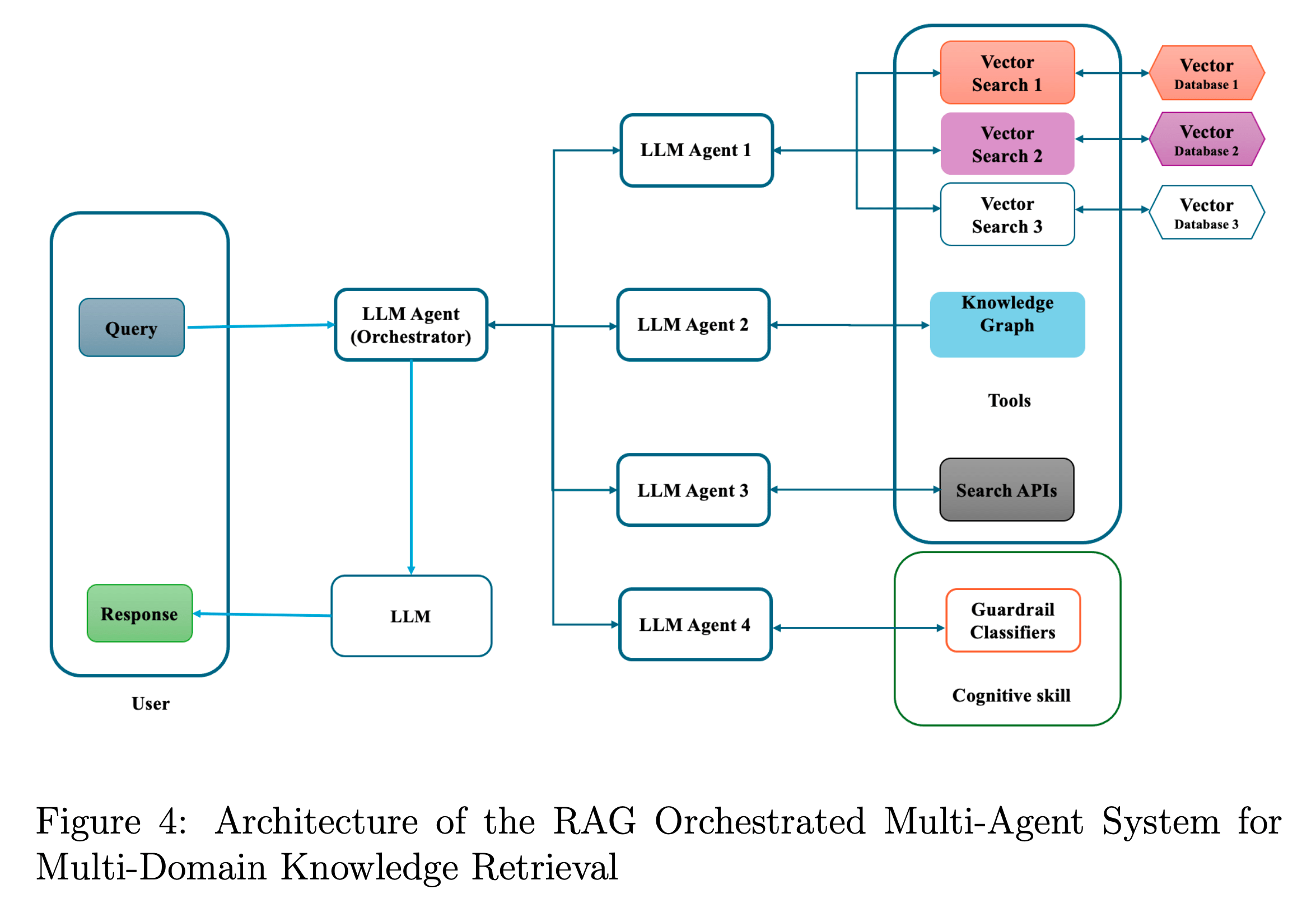

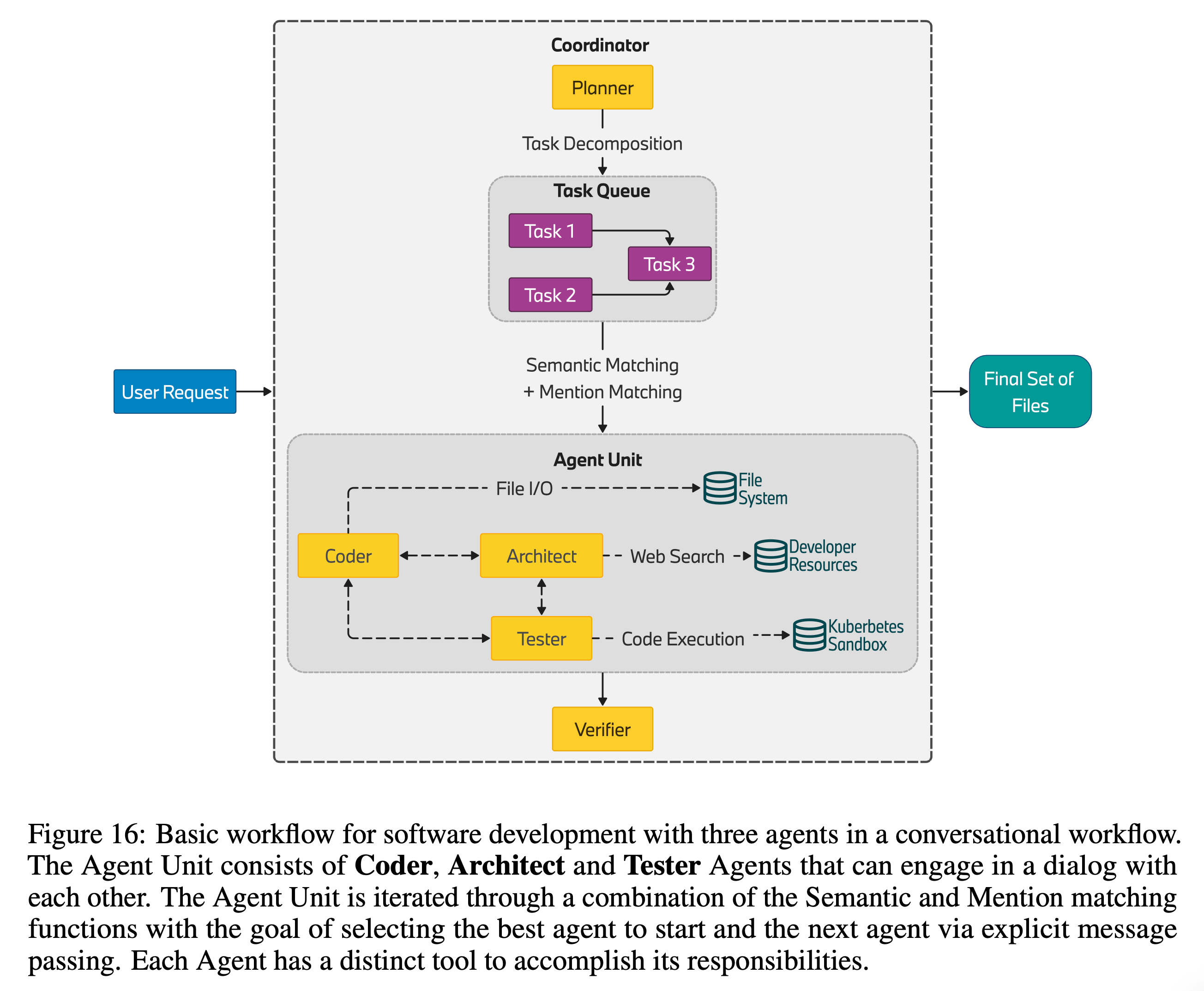

BMW Agents: A Framework For Task Automation Through Multi-Agent Collaboration

Crawford, Duffy, Evazzade, Foehr

This framework introduces innovative approaches to multi-agent collaboration, enabling complex task automation through distributed intelligence and coordinated decision-making...

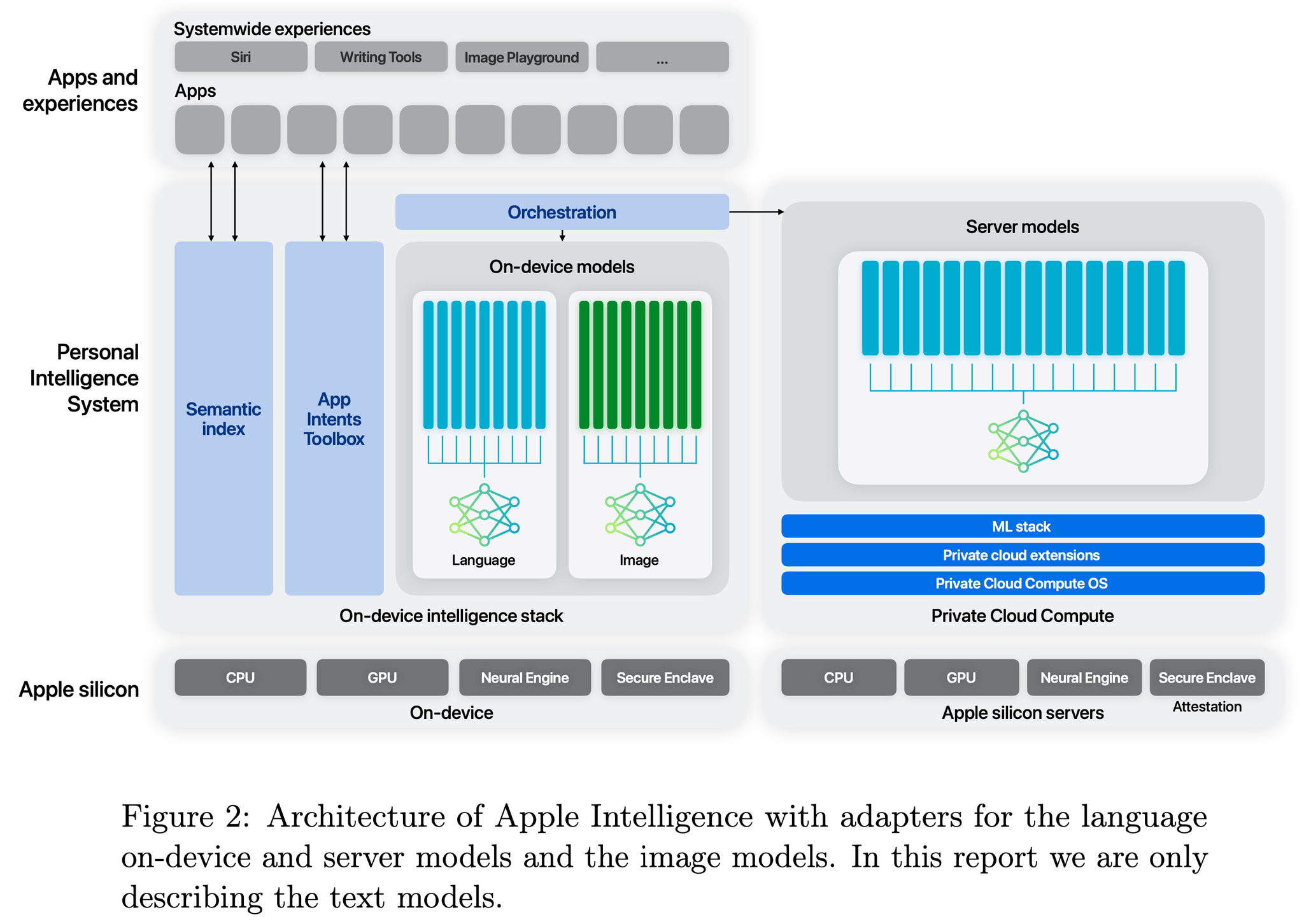

Apple Intelligence Foundation Language Models

Various Authors

Apple's approach to developing and deploying foundation models focuses on privacy-preserving techniques and efficient on-device inference...

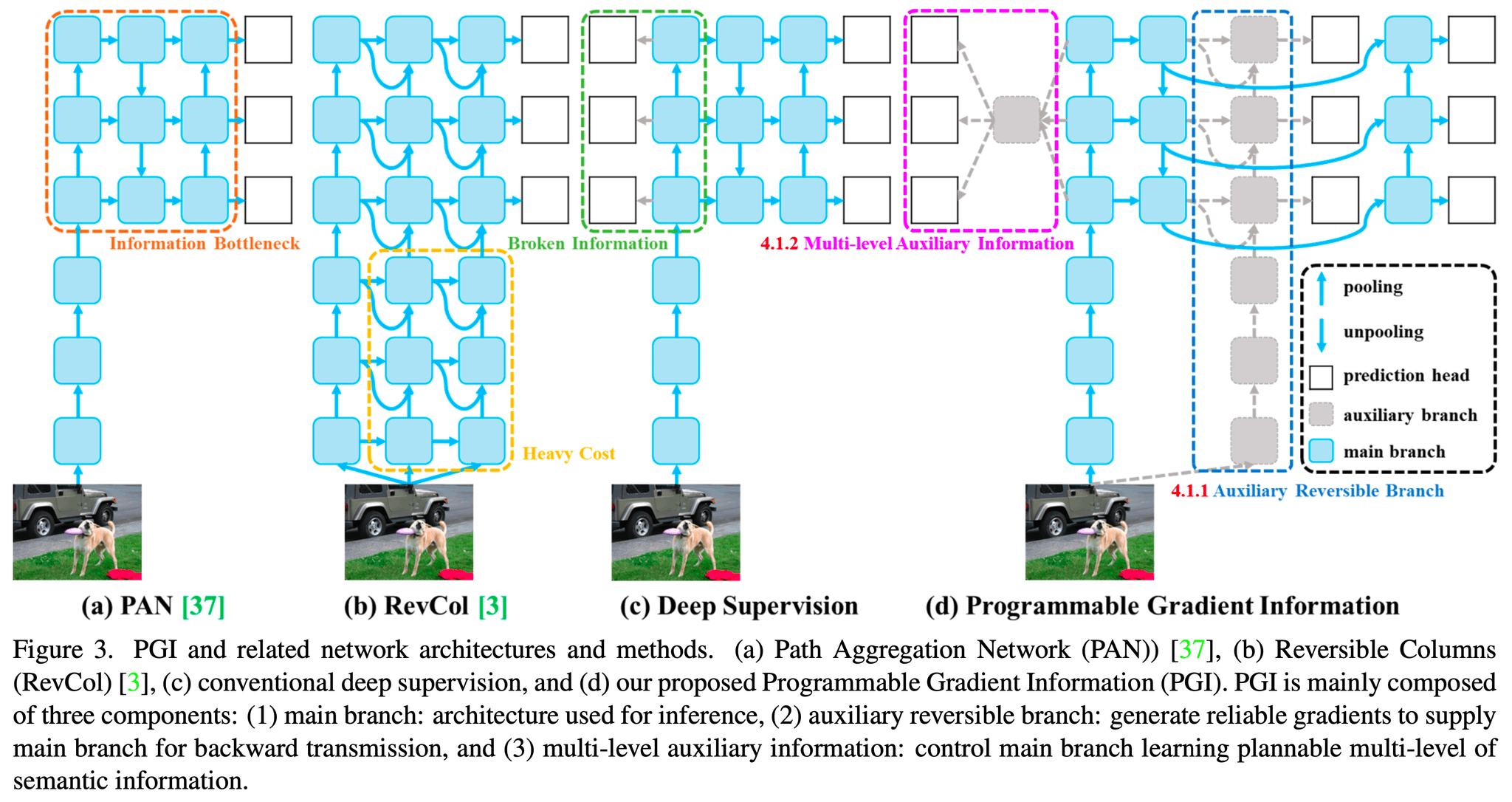

YOLOv9: Learning Using Programmable Gradient Information

Wang, C.-Y., Yeh, I.-H., Liao, H.-Y. M.

YOLOv9 introduces Programmable Gradient Information (PGI) and the GELAN architecture to reduce information loss as data passes through deep networks, enabling more effective feature learning and state-of-the-art detection accuracy...

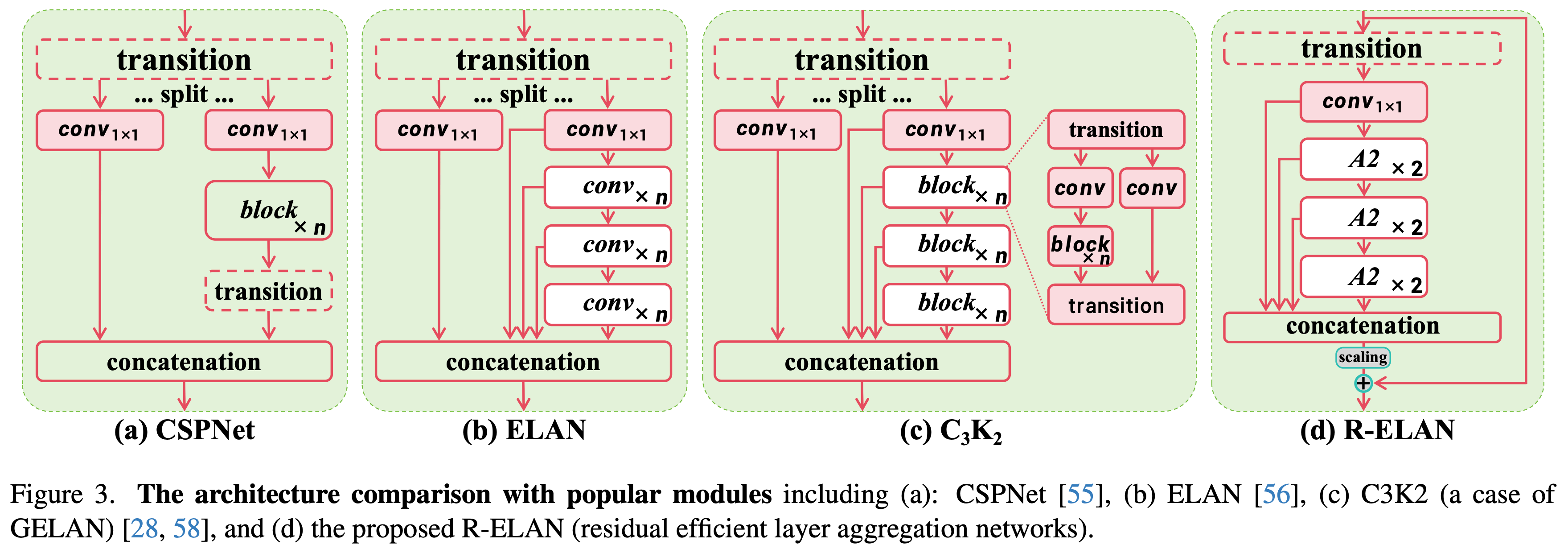

YOLOv12: Attention-Centric Real-Time Object Detection

Tian, Y., Ye, Q., Doermann, D.

YOLOv12 incorporates novel attention mechanisms to enhance feature representation while maintaining the speed advantage of the YOLO architecture...

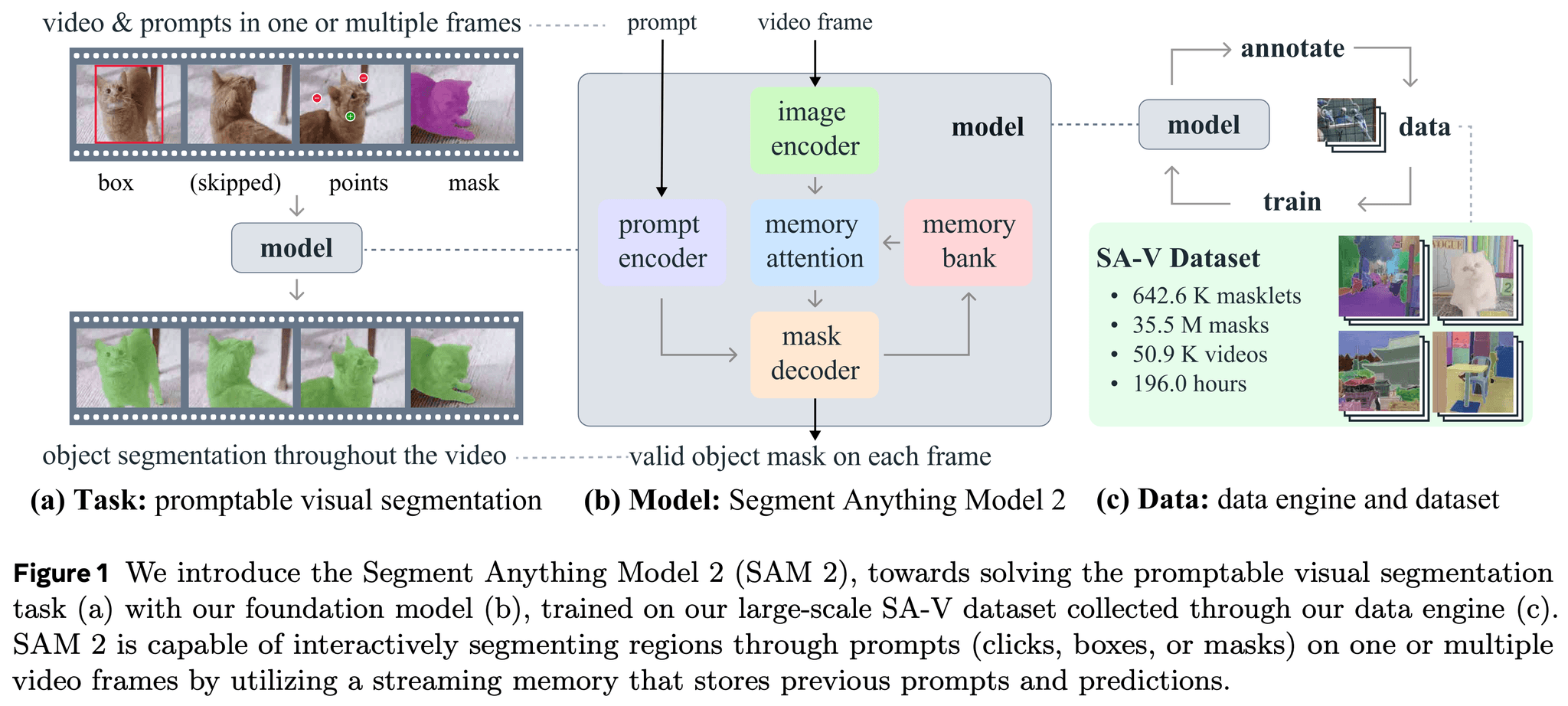

SAM 2: Segment Anything in Images and Videos

Ravi, N., Gabeur, V., Hu, Y.-T., et al.

SAM 2 introduces significant improvements over its predecessor, including better boundary precision, enhanced zero-shot capabilities, and extended functionality for video segmentation...

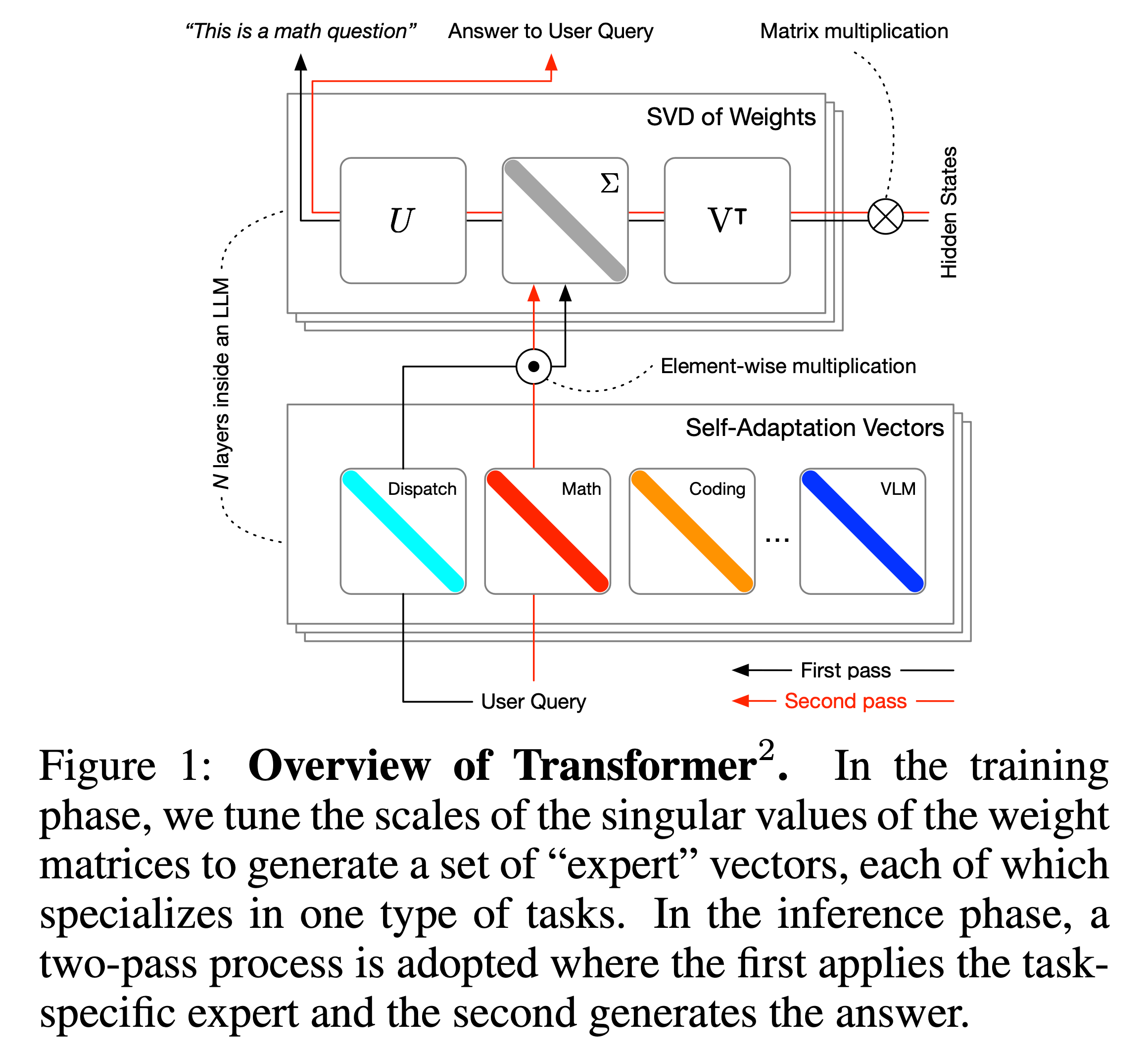

Transformer-Squared: Self-Adaptive LLMs

Sun, Q., Cetin, E., Tang, Y.

Transformer-Squared introduces a self-adaptation framework for language models, allowing them to adjust selected components of their weight matrices on the fly for specific tasks without explicit fine-tuning...